I've been focusing a lot on Java performance lately and ran across a Programmers StackExchange post asking for the most efficient way to tokenize a string in Java. It struck a nerve with me because the most common way to do this is to use String#split. But the split method takes as its delimiter a string that gets compiled into a regular expression. That is nice when you need it, but it is likely to be rather inefficient if all you need is to split on a single character, such as a space. Then I looked up the old StringTokenizer class, which I used years and years ago. Its Javadoc has this interesting tidbit:

StringTokenizer is a legacy class that is retained for compatibility reasons although its use is discouraged in new code. It is recommended that anyone seeking this functionality use the split method of String or the java.util.regex package instead.

Strangely, however, the accepted answer for the StackExchange question links to a blog post that shows benchmarks claiming that StringTokenizer is faster than String#split. So why shouldn't I use StringTokenizer if I don't need a regex to split a string into fields?
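To make the regex point concrete, here is a minimal sketch of what it means for the split delimiter to be a regular expression. The class name SplitRegexDemo and the helper splitLiteral are made up for illustration; only String#split, Pattern.quote and Arrays.toString are real JDK calls.

```java
import java.util.Arrays;
import java.util.regex.Pattern;

// Hypothetical demo class, not part of any benchmark in this post.
class SplitRegexDemo {

    // Treat the delimiter as a literal string by quoting it first.
    static String[] splitLiteral(String s, String delim) {
        return s.split(Pattern.quote(delim));
    }

    public static void main(String[] args) {
        String dotted = "a.b.c";

        // "." is the regex wildcard, so every character is a delimiter;
        // all tokens are empty and trailing empty tokens are dropped.
        System.out.println(dotted.split(".").length);                   // 0

        // Escaping or quoting turns the dot into a literal delimiter.
        System.out.println(Arrays.toString(dotted.split("\\.")));       // [a, b, c]
        System.out.println(Arrays.toString(splitLiteral(dotted, "."))); // [a, b, c]

        // A plain space has no special meaning in a regex.
        System.out.println(Arrays.toString("aa bb cc".split(" ")));     // [aa, bb, cc]
    }
}
```

So even when all you want is a single-character delimiter, the argument is still interpreted with regex rules, which is the flexibility (and potential cost) in question.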

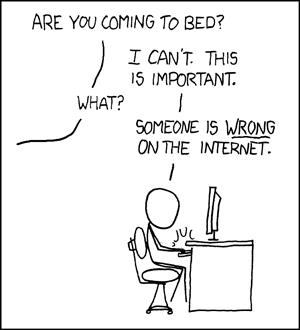

/* ---[ Someone is wrong on the internet ]--- */

Well, that blog post needs to be updated for two reasons:

- it used Java 6, so we need to see whether things are different in Java 7 and 8

- microbenchmarks are hard to do correctly and the author used questionable means (for example, there is not much warm-up time and his timings are awfully short)

The difficulty of doing accurate microbenchmarks has been greatly eased by the creation of the JMH benchmark tool. If you haven't adopted it yet, put it at the top of your technical TODO list. The documentation is excellent and it comes with many useful samples.

/* ---[ Splitting a Java string into tokens ]--- */

Of the techniques used in the original post, I am interested in four candidates:

- tokenize the string yourself with String#indexOf

- use String#split

- use the almost deprecated StringTokenizer

- use Guava's Splitter
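For readers who have not seen all of these side by side, the following is a rough sketch of the three JDK-only candidates. It is not the benchmark code from either post: the class and method names are invented for illustration, and Guava's Splitter is left out so the example has no external dependencies.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.StringTokenizer;

// Hypothetical illustration class; names are not from the original benchmarks.
class TokenizeCandidates {

    // Hand-rolled tokenizer: walk the string with indexOf and cut substrings.
    // Empty tokens between adjacent delimiters are kept.
    static List<String> withIndexOf(String s, char delim) {
        List<String> tokens = new ArrayList<>();
        int start = 0;
        int end;
        while ((end = s.indexOf(delim, start)) >= 0) {
            tokens.add(s.substring(start, end));
            start = end + 1;
        }
        tokens.add(s.substring(start)); // remainder after the last delimiter
        return tokens;
    }

    // String#split: the delimiter argument is a regular expression.
    static List<String> withSplit(String s, String regex) {
        return Arrays.asList(s.split(regex));
    }

    // StringTokenizer: every character in delims is a delimiter,
    // and empty tokens are skipped.
    static List<String> withStringTokenizer(String s, String delims) {
        List<String> tokens = new ArrayList<>();
        StringTokenizer st = new StringTokenizer(s, delims);
        while (st.hasMoreTokens()) {
            tokens.add(st.nextToken());
        }
        return tokens;
    }

    public static void main(String[] args) {
        String line = "aa bb cc dd";
        System.out.println(withIndexOf(line, ' '));         // [aa, bb, cc, dd]
        System.out.println(withSplit(line, " "));           // [aa, bb, cc, dd]
        System.out.println(withStringTokenizer(line, " ")); // [aa, bb, cc, dd]
    }
}
```

Note that the approaches are not fully interchangeable: with two adjacent delimiters, the indexOf loop and split produce an empty token, while StringTokenizer silently skips it.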

Here are the reported outcomes of the earlier measurements for these 4 options:

    IndexOf:          50ms
    StringTokenizer:  89ms
    GuavaSplit:      109ms
    Split:           366ms

I reran the author's exact code on my system using Java 7 and got the following:

    IndexOf:          52ms
    StringTokenizer: 104ms
    GuavaSplit:      119ms
    Split:           145ms

So using his benchmark technique, I got the same ordering, though the ratios have changed a bit. In Java 7, split is only about 3 times slower than indexOf, rather than about 7 times slower.

/* ---[ What does JMH say? ]--- */

I lightly rewrote these benchmarks using JMH. I also made longer strings to tokenize, rather than the two-token string used by the blog post author, as that seemed a more realistic data set. And I used three strings, instead of one:

    String[] lines = new String[]{
        "0000 12 34 5 666 77 88888 99",
        "aa bb cc dd ee ff gg hh ii jj kk ll mm nn oo pp qq rr ss tt uu vv ww xx yy zz",
        "Lorem ipsum dolor sit amet, consectetur adipisicing elit, sed do eiusmod"
    };

The JMH-based benchmark code is listed below. The results are rather interesting. In the original benchmarks raw time is measured, so lower is better. With JMH, I measured throughput (the default metric), reported here in operations per millisecond, so higher is better.

With Java 7 (1.7.0_60-b19) the results were:

    m.JavaStringSplits.indexOf          thrpt  10  840.147 ± 28.553  ops/ms
    m.JavaStringSplits.javaSplit        thrpt  10  694.870 ±  3.858  ops/ms
    m.JavaStringSplits.stringTokenizer  thrpt  10  473.812 ±  8.422  ops/ms
    m.JavaStringSplits.guavaSplit       thrpt  10  392.759 ±  8.707  ops/ms

And with Java 8 (1.8.0_20-ea):

    m.JavaStringSplits.indexOf          thrpt  10  827.136 ± 30.831  ops/ms
    m.JavaStringSplits.javaSplit        thrpt  10  700.809 ±  7.215  ops/ms
    m.JavaStringSplits.stringTokenizer  thrpt  10  480.788 ± 16.793  ops/ms
    m.JavaStringSplits.guavaSplit       thrpt  10  386.398 ±  5.323  ops/ms

With the presumably more robust and accurate JMH model of measuring microbenchmarks, String#split moved up the ranks and handily outperforms both StringTokenizer and Guava Splitter.

My hand-coded splitter using indexOf was about 18% to 21% "faster" (higher throughput) than String#split (18% on Java 8, 21% on Java 7), so we do see some overhead in String#split. But I conclude that the Javadoc for StringTokenizer is correct: use String#split for most scenarios, unless you know that 1) you don't need regular expressions to tokenize and 2) string splitting is your bottleneck.

A follow-up set of tests could be done on very large strings. In his "Let the Caller Choose" Mechanical Sympathy post from a few years ago, Martin Thompson advocated devising an API where you pass in the data structure to be populated during the tokenization, rather than relying on one being created in the underlying tokenization method and passed back to you. He states (but does not demonstrate):

In the Java world this idiom becomes critical to performance when large arrays are involved.
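As a rough illustration of that idiom (and not code from Thompson's post), here is a sketch of a splitter where the caller supplies the collection to fill. The names CallerChoosesSplitter and splitInto are hypothetical, and I have not benchmarked this version.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of a "let the caller choose" tokenizer API.
class CallerChoosesSplitter {

    // Appends the tokens of s to out and returns how many were appended.
    // The method allocates only the token substrings, never the container.
    static int splitInto(String s, char delim, List<String> out) {
        int count = 0;
        int start = 0;
        int end;
        while ((end = s.indexOf(delim, start)) >= 0) {
            out.add(s.substring(start, end));
            count++;
            start = end + 1;
        }
        out.add(s.substring(start)); // remainder after the last delimiter
        return count + 1;
    }

    public static void main(String[] args) {
        // The caller decides the container type and its initial capacity,
        // and can reuse the same instance across many lines.
        List<String> reusable = new ArrayList<>(32);
        String[] lines = {"aa bb cc", "dd ee"};
        for (String line : lines) {
            reusable.clear();
            int n = splitInto(line, ' ', reusable);
            System.out.println(n + " tokens: " + reusable);
        }
    }
}
```

The design choice here is that the tokenizer no longer dictates whether you get an array, an ArrayList or something else, and a long-running caller can avoid allocating a fresh container for every line.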

/* ---[ Find the flaw ]--- */

One of my favorite interview questions is to put a piece of code in front of someone and say "find the flaws". And Aleksey Shipilëv, the author of JMH, states:

Your [JMH] benchmarks should be peer-reviewed.

Mine have not been, so I throw them out to the internet to find the flaws in my quick analysis.

/* ---[ The code and setup ]--- */

Benchmarks were performed on:

Ubuntu (Xubuntu) 14.04 LTS with the 3.13.0-37 Linux kernel and 8 Intel(R) Core(TM) i7-3610QM CPU @ 2.30GHz cores.